Ok, I've had enough. The hype train around AI is just writing cheques the technology simply cannot cash. I'm not saying it doesn't have its uses; it does. Please don't read this post and hear things I'm not saying. I'm just fed up with the "AI is inevitable, developers are out of jobs in 2026" crowd. I didn't jump up and down saying all lawyers were out of jobs when an LLM passed the bar exam. I didn't say journalists and writers were doomed when an LLM could write something that sounded vaguely like a journalist would. Why? Because I recognised there was a massive delta between imitating a journalist or writer and actually being one. That's called nuance, and I'm hoping the Internet learns about it one day.

A lot of people seem to think that if the code compiles, the job is 90% done. The truth is, writing code that compiles and passes a test is maybe 10-20% of my job. If the goal were simply to write something that could compile and pass a test, then yes, I would indeed hand in my badge and go find a beach to retire on. If the job of a lawyer were to pass a bar exam, they too would all retire. If the job of a journalist were to put the right number of paragraphs into an article, so would they. That simply isn't how development, lawyering or writing works. Allow me to explain with two examples.

Let's start simple. A customer sent in a crash from Robot Rocket. It was an unsymbolicated C++ crash, so I turned to Gemini CLI to help me diagnose it. I told it where to find the .ips crash file, what the issue was, and that I wanted to know which part of my code might be responsible. In about a minute it had found the exact line and offered a fix: add a null check before using the variable that was null. And dear reader, that would indeed have fixed the crash. Job done, right? Wrong. That's exactly what I'd expect from a developer fresh out of the education system on their very first day at work. Because, as any half-decent developer knows, when a crash occurs your job isn't actually to fix it. It's to determine why it happened, and what the correct fix is. The code crashed because you didn't anticipate something. In this case I had assumed that a method called "initialize" in an API would always be called before its other methods, and I was wrong. The method that crashed was setting up some configuration on an object, so just adding a null check would have ignored the real problem. Add the null check and, guess what, there's no configuration, and you've introduced a very subtle bug that will take you much longer to find. Someone could save important config for, say, a live gig with your app, open it at the gig, and not realise half their config hadn't loaded properly.

Could I have sat there prompting the LLM until it fixed it properly? Sure, but I bring this example up for a reason. The LLM isn't a developer. It doesn't think like one. It doesn't have enough context to reason properly through complex projects and understand what the implications of a change truly are. It's only good at imitating what a developer does.
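To make the difference between silencing a crash and fixing its cause concrete, here is a minimal C++ sketch. The real Robot Rocket code isn't shown here, so every name in it (`Engine`, `Config`, `applySavedTempoNullCheck`, `applySavedTempo`) is hypothetical, and the tempo setting just stands in for "some important config". It contrasts the null-check fix with one possible fix for the cause, assuming `initialize()` is cheap and safe to call more than once; the right fix in a real codebase depends on why the call order was wrong in the first place.

```cpp
#include <cassert>
#include <memory>
#include <optional>

// Hypothetical user settings, e.g. what someone saved for a live gig.
struct Config {
    int tempo = 120;
};

// Hypothetical stand-in for the API in the post: initialize() is expected
// to run before anything else, but callers are not guaranteed to call it.
class Engine {
public:
    // Creates the config if it doesn't exist yet (safe to call repeatedly).
    void initialize() {
        if (!config_) {
            config_ = std::make_unique<Config>();
        }
    }

    // The "null check" fix: no more crash, but if initialize() never ran,
    // the saved tempo is silently dropped and the caller never finds out.
    void applySavedTempoNullCheck(int tempo) {
        if (config_) {
            config_->tempo = tempo;
        }
    }

    // Fixing the cause instead: make sure initialization has happened
    // regardless of call order (assumes initialize() is idempotent).
    void applySavedTempo(int tempo) {
        initialize();
        assert(config_ != nullptr);  // initialize() guarantees this
        config_->tempo = tempo;
    }

    // Returns the active tempo, or std::nullopt if no config was ever set up.
    std::optional<int> tempo() const {
        if (!config_) {
            return std::nullopt;
        }
        return config_->tempo;
    }

private:
    std::unique_ptr<Config> config_;
};
```

With the null-check version, calling `applySavedTempoNullCheck(90)` before `initialize()` neither crashes nor applies the setting, so `tempo()` comes back empty and nobody is told. The second version always ends up holding the saved value of 90, whatever order the calls arrive in.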

But enough simple examples; let's get concrete. A few years ago I wanted to build my own simple podcast app, just for me. I wanted to be able to add podcasts, have their episodes sorted nicely in a list, and play them easily at any time. I wanted to support streaming and downloads. I wanted to support CarPlay. I wanted it to be so simple and bulletproof that I'd never have to think about using it. So I sat down and, over the space of a week or two, coded it up. I've used it ever since and it has indeed been bulletproof. Maybe once or twice a year I'll clean something up or tweak something, but since those initial two weeks I haven't significantly changed any part of this app. It has just worked the way I wanted it to.

And isn't this exactly what the AI hype train is promising? That the AI is now good enough to do exactly the above. To replace me, to be a developer. So if that's true, here's my challenge: I want you to build an app like this for yourself. I want you to prompt (no coding allowed) your way to a fully featured podcast app. I'll even give you mine as a template:

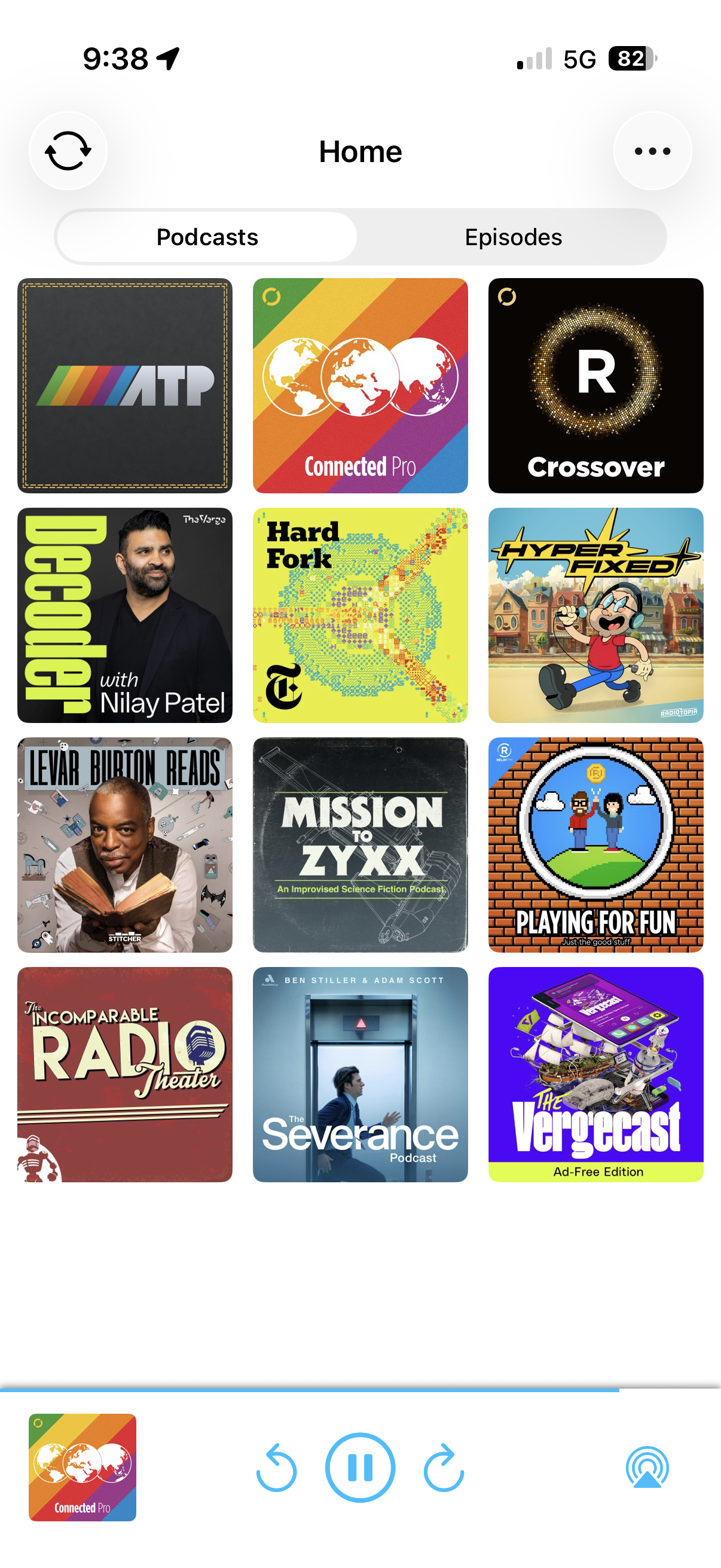

It's a super simple 2 tab design. The first tab shows all the podcasts you've added:

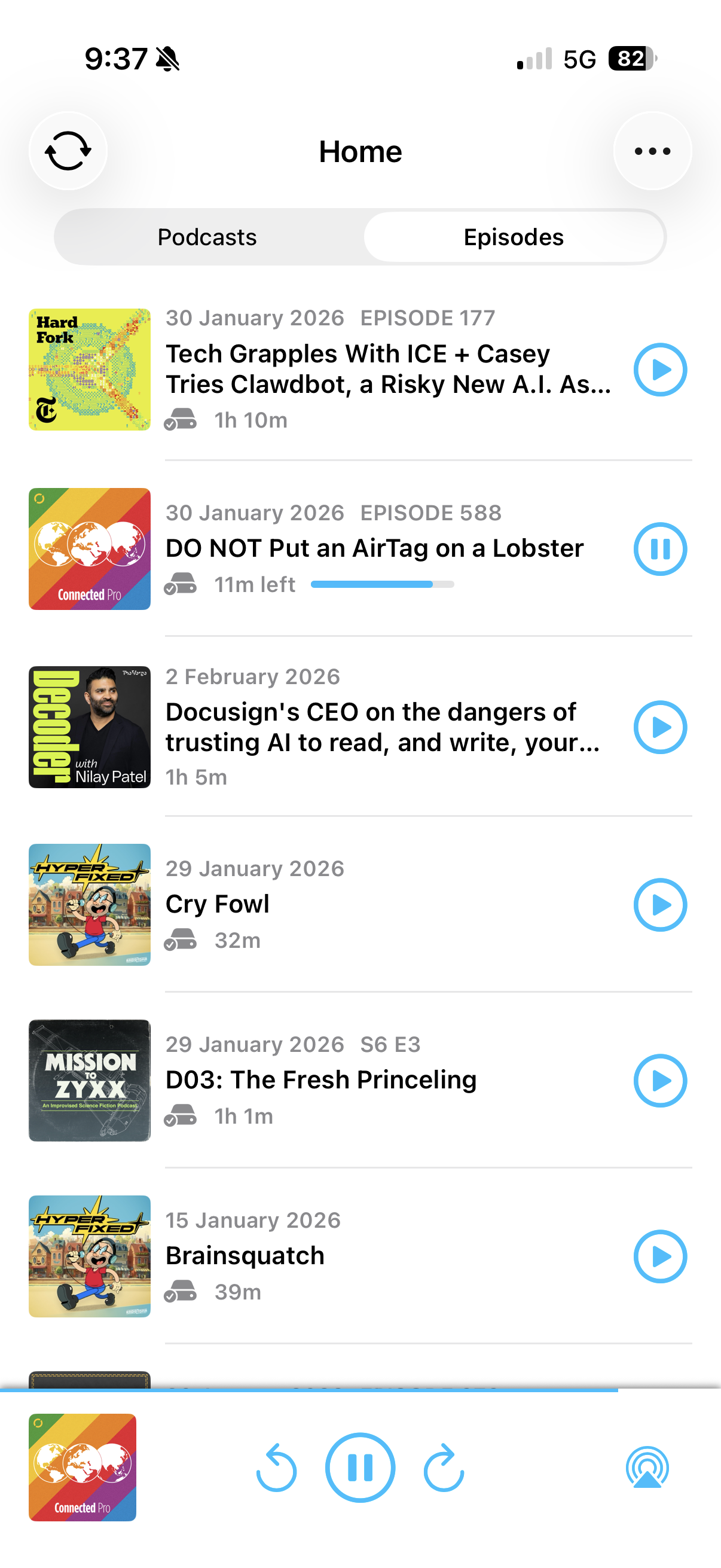

The second tab shows your episodes:

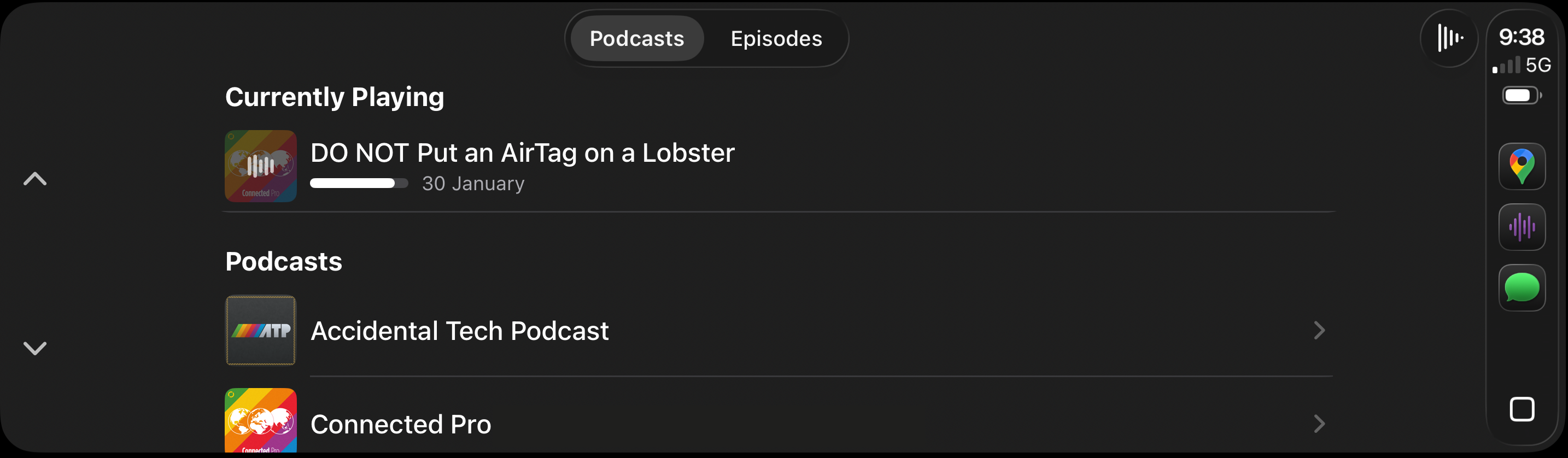

And finally there's the CarPlay integration, which, again, isn't complicated:

And if you manage to get to a fully featured, bug-free app that does all of the above and that you love using every day, then I will be forced to admit that LLMs are far more capable than I am giving them credit for. Again, don't hear what I'm not saying. I don't care if you get 70% of the way there and it looks kind of like my app. That's not impressive; that's an LLM imitating what I did, not actually doing it. I want a 100% complete, working app. And I want it built by a non-developer: someone who doesn't really know how to code, but knows how a podcast app should work. That kind of person could hire a developer and get the above built. So if the LLM is now good enough to be a developer, and to replace one, then it should be able to do this without any issues.

If you've managed to do that, please reach out to me on Mastodon and claim your trophy. After all, perhaps I'm just holding it wrong?